The Best Apple Vision Pro Feature Is Now Available on Your iPhone and iPad

Key Takeaways

- Eye tracking, available on iPhone and iPad with iOS 18 and iPadOS 18, lets you control your device with your eyes.

- Enable eye tracking in Settings, calibrate it, and use Dwell Control for hands-free navigation.

- Customize eye tracking preferences for a more efficient experience, especially for those with motor difficulties.

Precision eye tracking works so well on Vision Pro that Apple decided to bring it to the iPhone and iPad. As an accessibility feature, eye tracking works across the system, including in built-in and third-party apps. Here’s how to set up and use it.

What Is Eye Tracking on Your iPhone?

According to Apple, eye tracking, part of a new set of accessibility features in iOS 18, lets you use your eyes to “navigate through the elements of an app and use Dwell Control to activate each element, accessing additional functions such as physical buttons, swipes, and other gestures.”

Eye tracking is available on all iPhone models from the iPhone 12 family (2020) onward, including the third-generation iPhone SE (2022). On iPad, eye tracking supports iPad mini 6 (2021) and later, iPad 10 (2022), iPad Air 4 (2020) and later, the third-generation 11-inch iPad Pro (2021) and later, and the fifth-generation 12.9-inch iPad Pro (2021) and newer.

Eye Tracking doesn’t work in iPhone Mirroring.

How to Set Up and Calibrate Eye Tracking on an iPhone or iPad

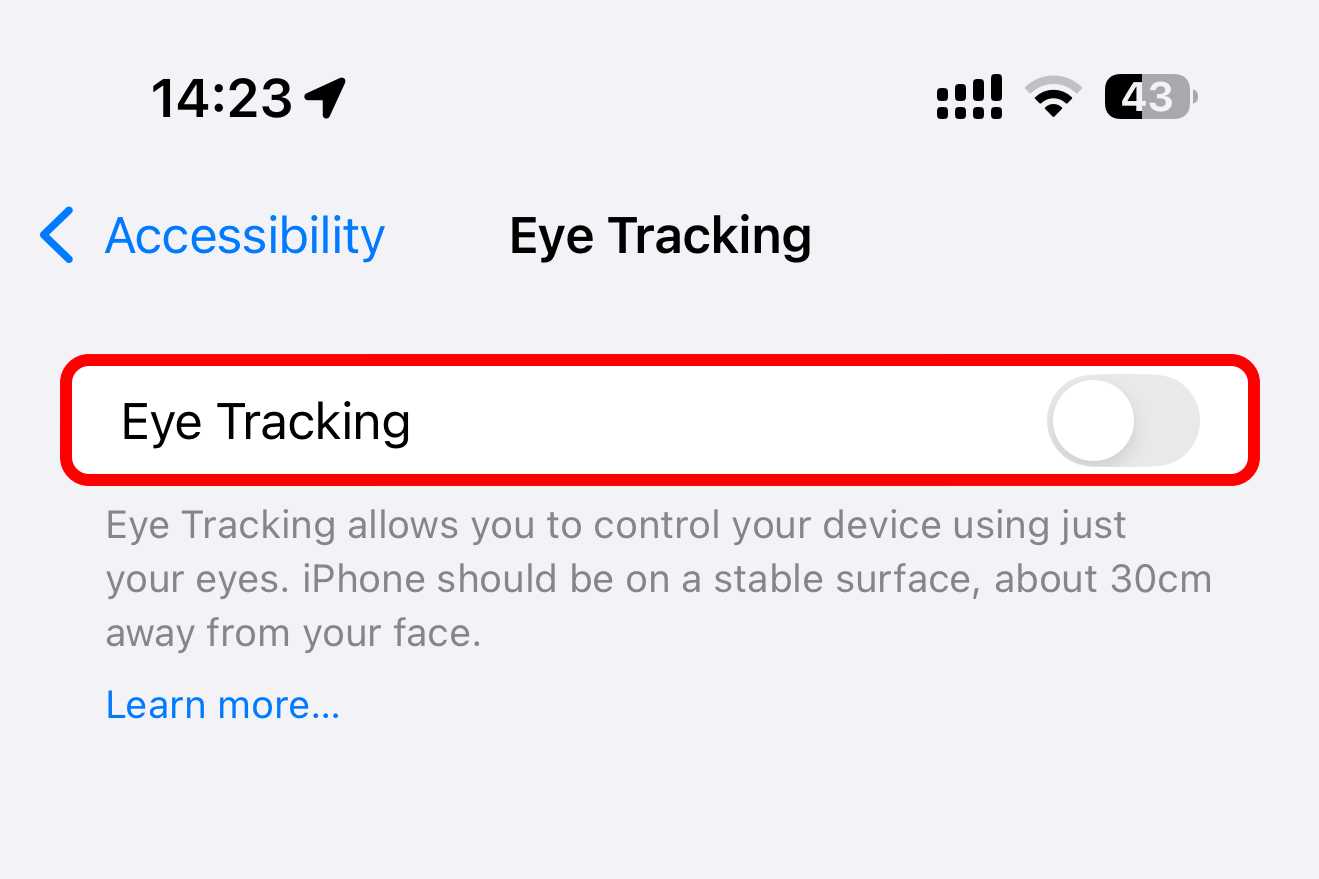

Eye tracking must be enabled in your accessibility settings and calibrated before you can use it. Venture into Settings > Accessibility and select the Eye Tracking option under the Physical and Motor section, then turn on “Eye Tracking” to enable the feature.

You’ll be prompted to calibrate eye tracking by following a dot that pops up in different onscreen locations (avoid blinking during the training process). As you focus on the dot, the front camera captures eye movement to pinpoint the direction of your gaze.

All eye tracking data stays on your device to protect your privacy and is never uploaded to servers or shared with Apple and other companies.

For best results when calibrating eye tracking:

- Be still while setting the feature up and don’t tilt your head in any direction.

- Don’t hold the device in your hands; put it on a flat surface instead.

- If you have an iPhone MagSafe stand, such as Satechi’s 2-in-1 foldable Qi2 stand, or a foldable cover for your iPad, use it during calibration.

- Position your iPhone about 12 inches (about 30cm) from your face and your iPad about a foot and a half (about 46cm) away.

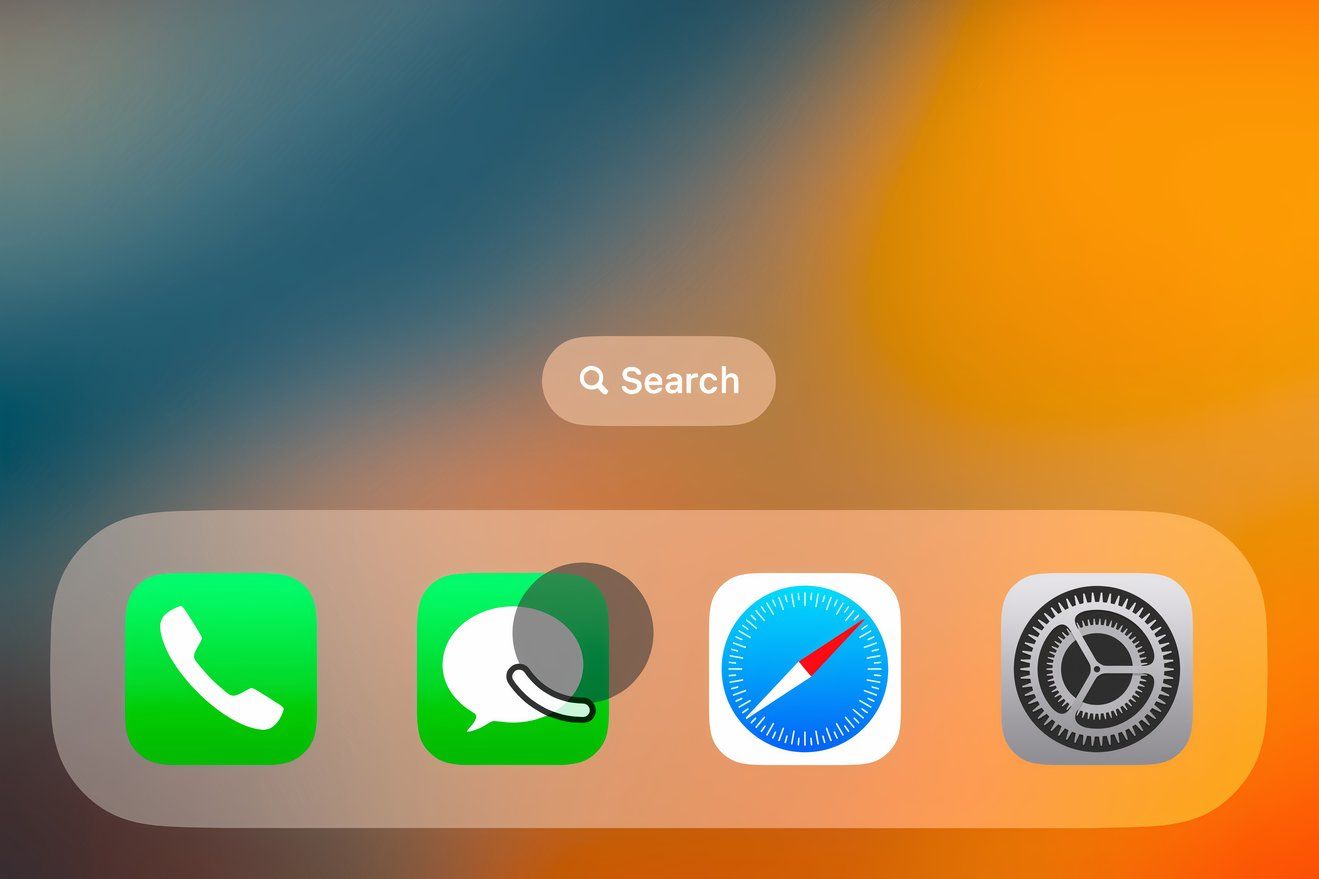

With eye tracking calibrated, a transparent dot appears on the screen. That’s your eye tracking pointer. You’ll also notice a black rectangle outline around the nearest user interface item you’re currently staring at. Try looking around the screen to check how it works.

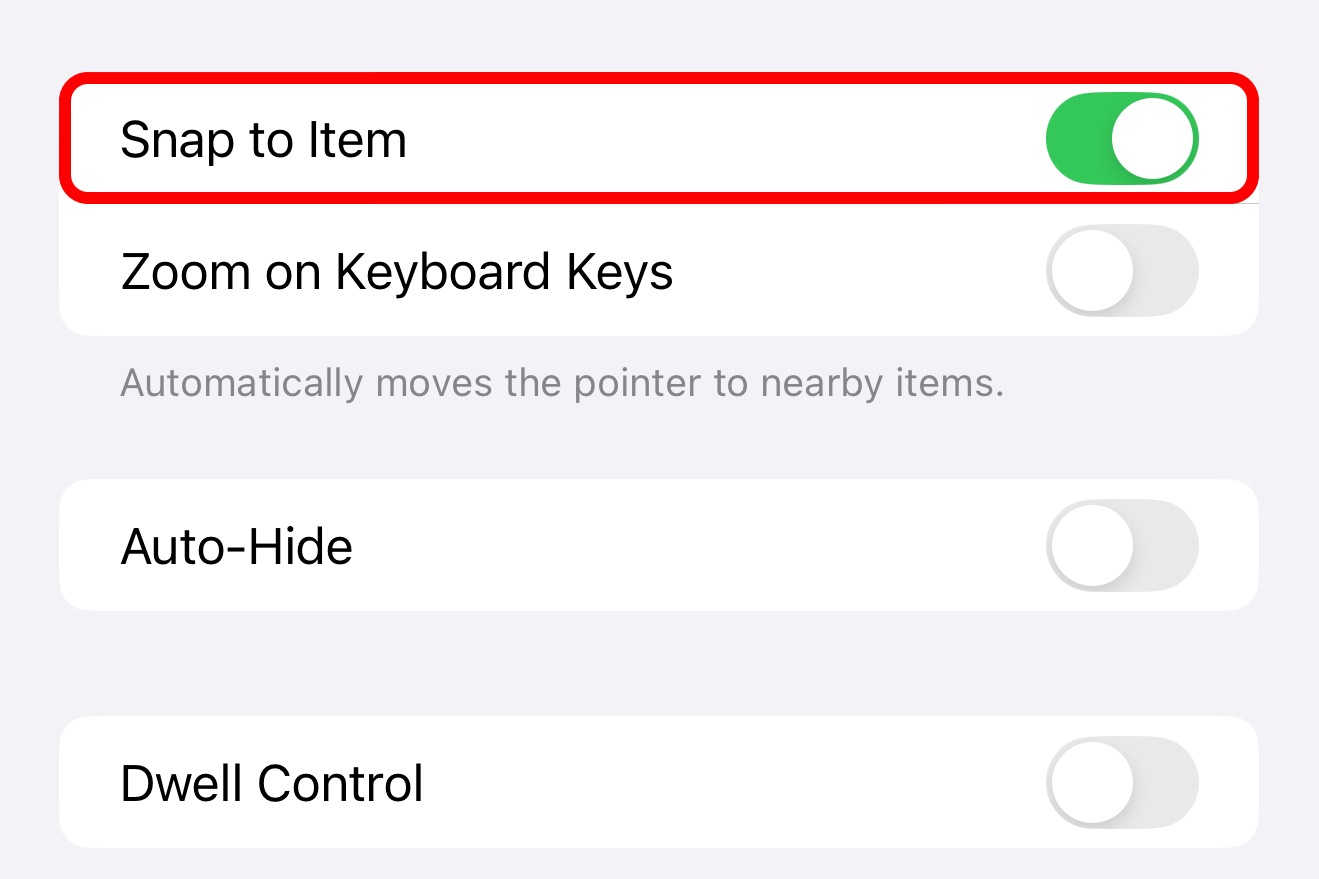

Save yourself the frustration and leave “Snap to Item” enabled to ensure the selection rectangle snaps to the nearest onscreen element instead of jumping all over the place.

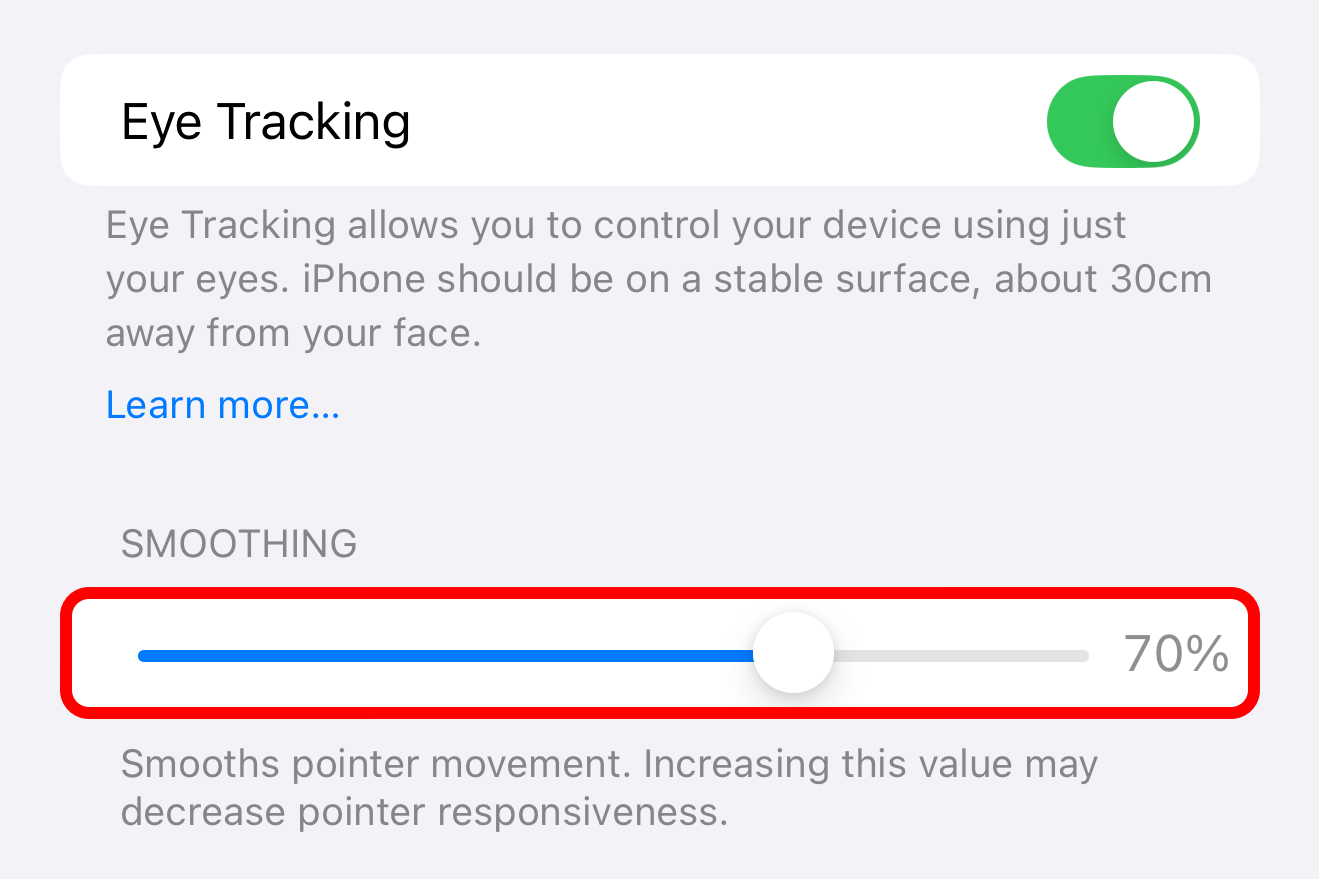

If the pointer feels jumpy, fine-tune it with the Smoothing slider. More smoothing makes the pointer steadier but less responsive, while less smoothing makes it more responsive but jumpier.

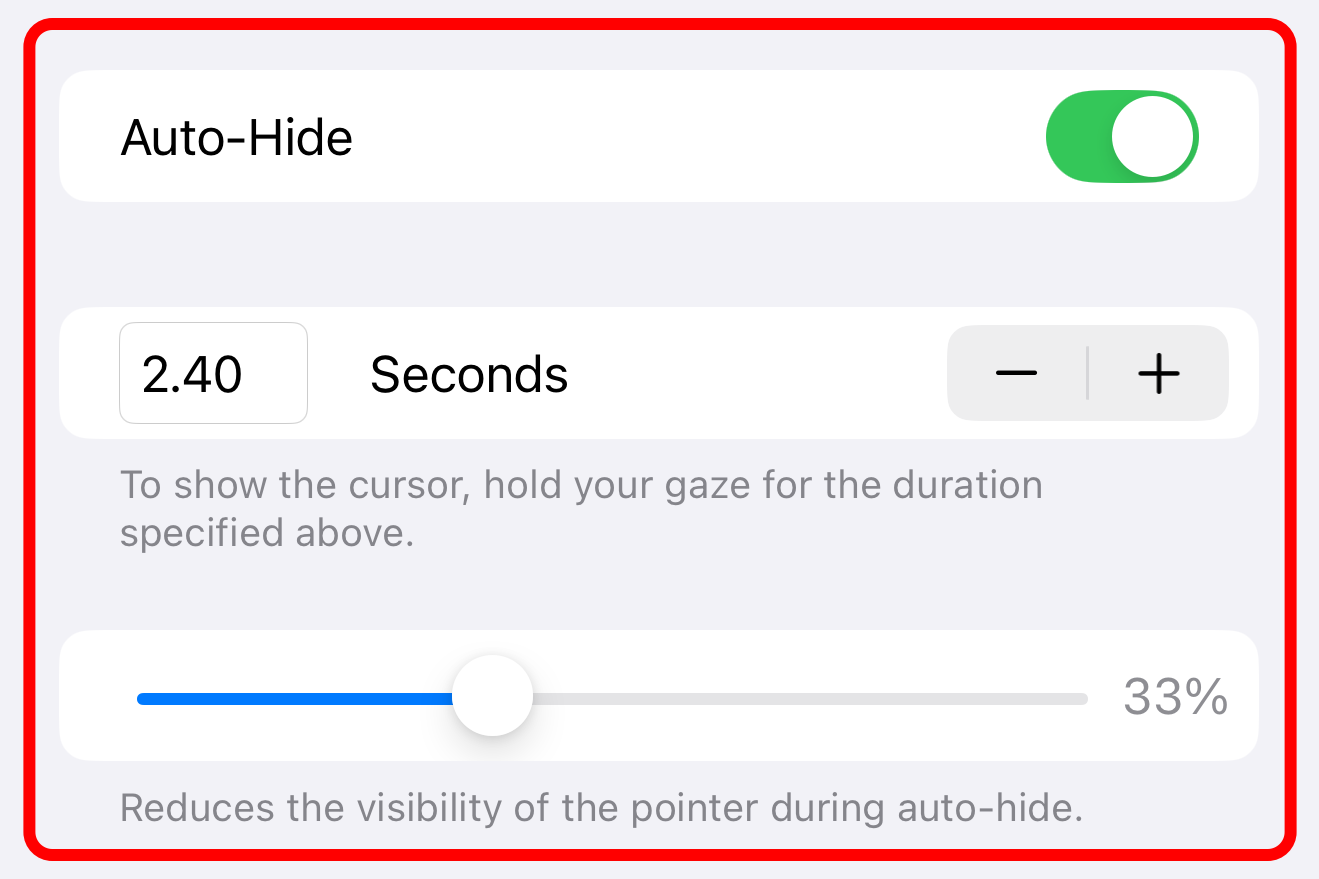

For an optimal experience, turn on “Auto-Hide” to automatically fade the eye tracking pointer while your eyes are moving. In the Seconds section, adjust how long you must hold your gaze for the pointer to reappear (the default is 0.5 seconds). The slider below adjusts the pointer’s visibility while it’s auto-hidden (the default is 40% transparency).

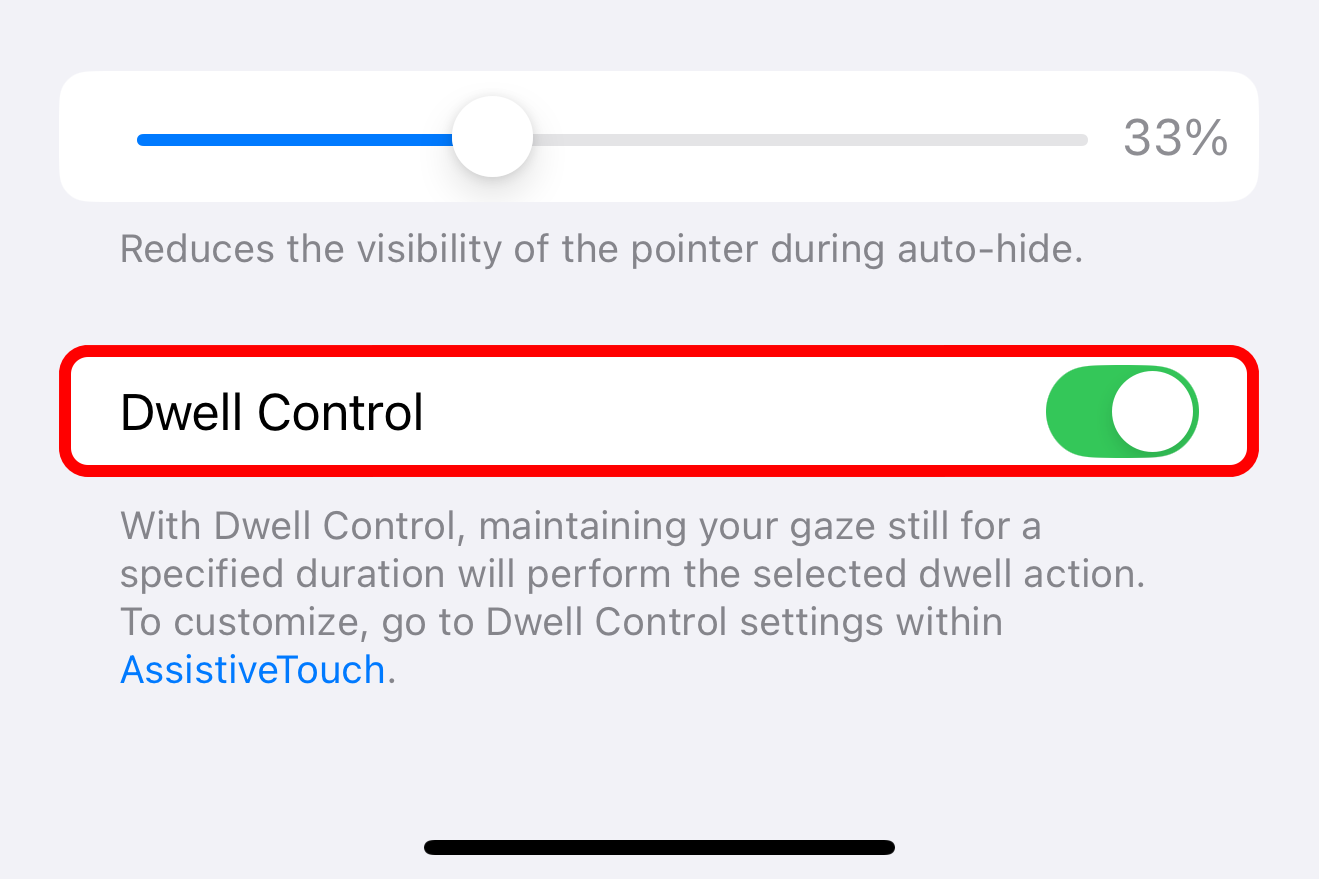

The most important thing to do after enabling eye tracking is to turn on “Dwell Control” at the bottom of the screen. This lets you select an item by holding your gaze on it for a set time, which is also how you open the AssistiveTouch menu with options to scroll, swipe, and more.

How to Use Eye Tracking on Your iPhone

With Eye Tracking set up, you can navigate your iPhone or iPad using only your gaze, as an onscreen pointer follows the movement of your eyes.

To change the pointer size and color, go to Settings > Accessibility > Pointer Control.

Look at an interface element like a button or a text field and hold your gaze (also known as “dwell”) to trigger it. When dwelling, an outline appears around the item and starts filling. When the dwell timer finishes, the default tap action is performed.

To change the dwell timer, go to Settings > Accessibility > Touch > AssistiveTouch, scroll near the bottom, and use the “+” and “-” buttons to adjust it in 0.05-second increments (the default is 1.5 seconds, and the maximum is 4 seconds).

To access additional actions like scrolling, dwell on the gray AssistiveTouch dot to open its menu.

Using AssistiveTouch to Swipe and Scroll With Your Eyes

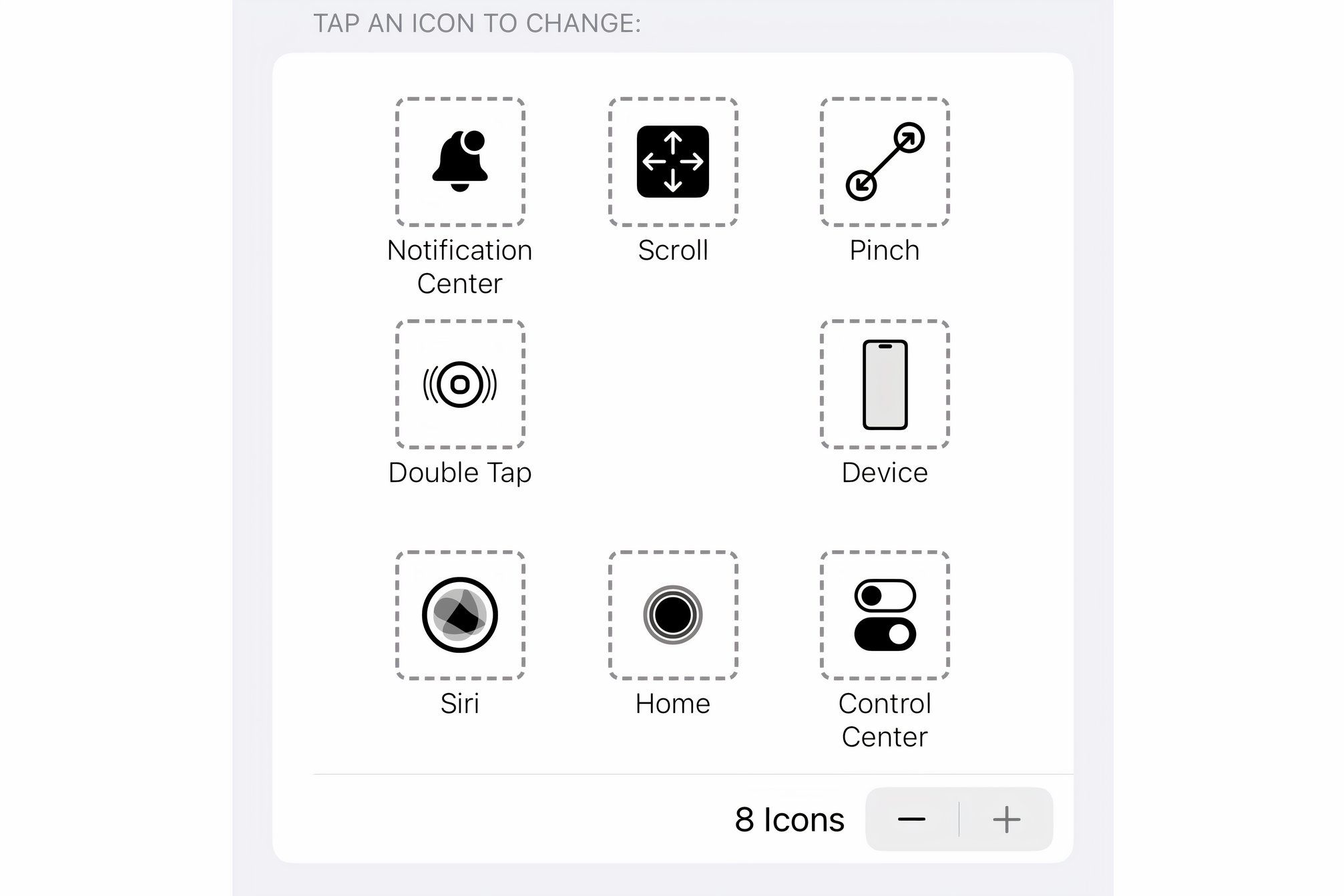

To optimize your AssistiveTouch menu for eye tracking, go to Settings > Accessibility > Touch > AssistiveTouch > Customize Top Level Menu. Here, you can replace any option in the AssistiveTouch menu, such as “Camera,” with something more useful; hit an icon to pick a new action from the list, then hit “Done” to save the changes.

At the very least, add scroll gestures and the Home action to your AssistiveTouch menu to scroll and get to the home screen with your eyes.

You can only scroll with your eyes at a fixed speed. Inertia and the rubber-banding effect iOS is famous for are unavailable when eye tracking is active.

Take your time to pick actions that work best for you. For example, I’ve added “Double Tap,” “Hold and Drag,” “Control Center,” “Notification Center,” and “Siri” to my AssistiveTouch menu.

Activating Hot Corners With Eye Tracking

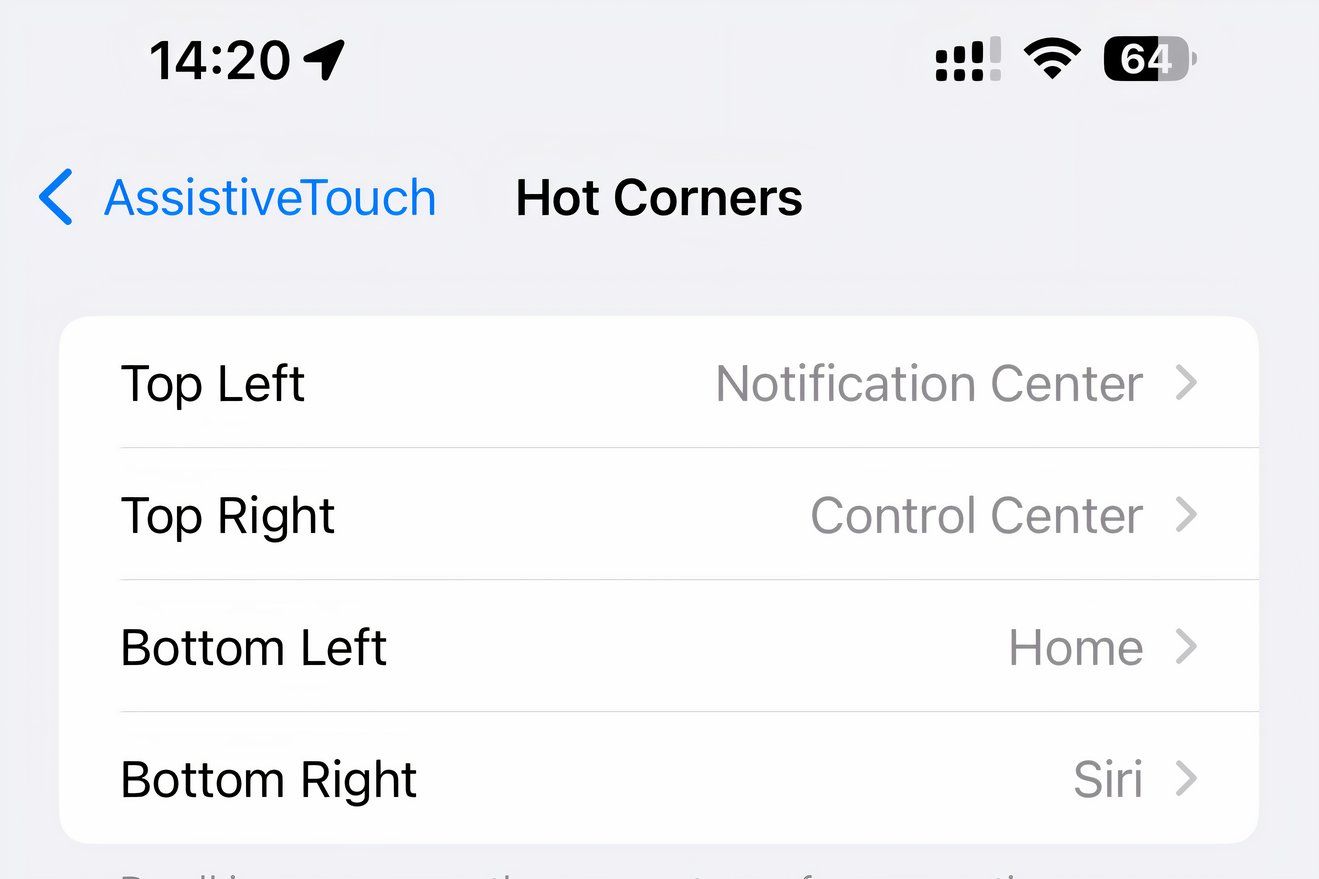

The Hot Corners feature allows you to trigger different actions by looking at each screen corner. To configure this feature to your liking, go to Settings > Accessibility > Touch > AssistiveTouch, scroll down and select “Hot Corners,” then select the Top Left, Top Right, Bottom Left, or Bottom Right option to assign it a desired system action.

Hot Corners automatically turns on when you enable AssistiveTouch and Eye Tracking.

Hot Corners are a great way to trigger frequently used actions without opening the AssistiveTouch menu. I’ve configured mine to automatically take me to the Home Screen, open the Control Center, access the Notification Center, and bring up Siri.

How to Turn Off Eye Tracking on an iPhone

To disable eye tracking, go to Settings > Accessibility > Eye Tracking and turn off “Eye Tracking.” You’ll need to go through calibration again every time you re-enable the feature. Unfortunately, there’s no Control Center toggle to disable Eye Tracking temporarily.

Keep in mind that eye tracking calibration will automatically start if you change the position of your device or face. You can also trigger calibration at any time by looking at the top-left corner of the screen and holding your gaze until the circle around the dwell pointer fills up (this handy shortcut is actually a Hot Corner action, and you can change it if you like).

Tips for Better Eye Tracking on Your iPhone

- If eye tracking is unreliable, try disabling and setting it up again.

- Eye tracking works much better on an iPhone held in portrait orientation than on an iPad, because the iPhone’s front camera points straight at your face.

- Glasses wearers may get better results without glasses, because refraction through the lenses and screen reflections on them can throw eye tracking off.

- Make sure your face is adequately lit. Eye tracking performs poorly in dimly lit environments, especially when a nearby light source casts shadows on your face.

- The front camera (the TrueDepth camera on Face ID models) must have a clear view of your face for eye tracking to work reliably. Use a microfiber cloth or similar non-abrasive material to wipe fingerprints and smudges off the front camera lens.

- For the best results, use specialized eye-tracking assistive hardware such as TD Pilot, Eyetuitive, or similar MFi-certified products.

As expected, eye tracking on iPhone and iPad is a rudimentary implementation that can be frustrating and clunky. It’s nowhere near as useful as on Vision Pro, where eye tracking is the primary interaction method.

But as an accessibility feature, eye tracking is a great option for people with motor difficulties to control their iPhone or iPad hands-free.