Benchmark Anything With This Powerful Linux Tool

Summary

- Hyperfine is a benchmarking tool that runs commands multiple times for accurate averages.

- Hyperfine is user-friendly and allows results to be exported in Markdown or JSON formats for further analysis.

- You can use Hyperfine to compare program efficiency, optimize code, and automate benchmarking.

If you need to know how fast something runs, Hyperfine will tell you. This user-friendly, versatile tool available on Linux takes all the effort out of benchmarking.

What Is Hyperfine?

Hyperfine is an open-source benchmarking tool for Linux, macOS, and Windows. Like the time command, which is available in most distros and shells, hyperfine will measure the amount of time it takes to run a program:

On the surface, hyperfine does the same job as time, with prettier output. But the tool has several advantages. Hyperfine:

- Runs a command several times, producing more accurate averages.

- Can test and compare several commands at once.

- Accounts for caching, outliers, and the effect of shell spawning on results.

- Can export results in Markdown or JSON formats for further analysis.
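To see why repeated runs matter, here is a minimal Python sketch of the idea behind the first point: time a command several times and report the mean and spread rather than a single figure. It is not how Hyperfine itself is implemented (it leaves out shell-overhead correction, warmup, and outlier detection), and the `time_command` and `summarize` helpers are names made up for this illustration.

```python
import statistics
import subprocess
import sys
import time


def time_command(argv, runs=10):
    """Run argv `runs` times and return each run's wall-clock duration in seconds."""
    durations = []
    for _ in range(runs):
        start = time.perf_counter()
        # Discard the command's output so terminal I/O does not skew the timing.
        subprocess.run(argv, stdout=subprocess.DEVNULL,
                       stderr=subprocess.DEVNULL, check=False)
        durations.append(time.perf_counter() - start)
    return durations


def summarize(durations):
    """Return the mean, standard deviation, minimum, and maximum of the timings."""
    return {
        "mean": statistics.mean(durations),
        # stdev needs at least two samples; report 0.0 for a single run.
        "stddev": statistics.stdev(durations) if len(durations) > 1 else 0.0,
        "min": min(durations),
        "max": max(durations),
    }


if __name__ == "__main__":
    # Stand-in command: start the Python interpreter and exit immediately.
    stats = summarize(time_command([sys.executable, "-c", "pass"], runs=5))
    print(f"{stats['mean'] * 1000:.1f} ms +/- {stats['stddev'] * 1000:.1f} ms "
          f"(min {stats['min'] * 1000:.1f} ms, max {stats['max'] * 1000:.1f} ms)")
```

A single run gives you one number with no sense of how noisy it is; even five runs show whether the spread is small enough to trust the mean.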

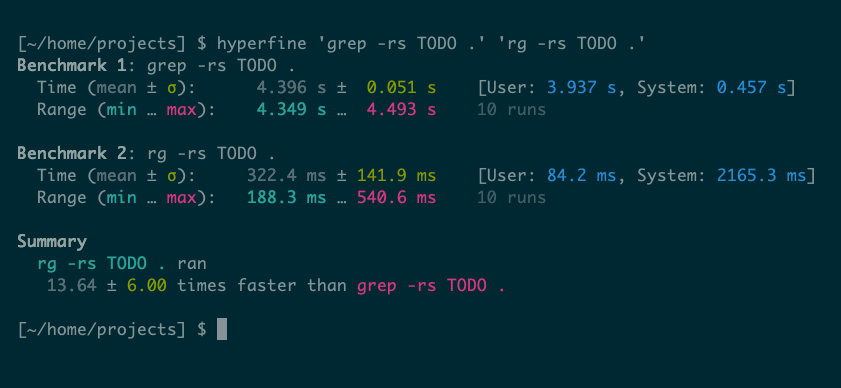

Hyperfine is particularly useful when you’re writing your own programs. You can try optimizations, test them using Hyperfine, and update your code accordingly. But you can also use it to choose between different programs, like grep vs. ripgrep for example:

How to Install and Use Hyperfine

Written in Rust, Hyperfine is a highly portable command that comes with man pages and modern command-line features like long-form options and tab completion.


Installation

Hyperfine packages are available for many Linux distributions and other OSes.

On Ubuntu, you can install Hyperfine from a .deb release, e.g.

wget https://github.com/sharkdp/hyperfine/releases/download/v1.19.0/hyperfine_1.19.0_amd64.deb

sudo dpkg -i hyperfine_1.19.0_amd64.deb

Check the project’s GitHub page for the latest release on your architecture.

On Fedora, you can use the dnf package manager to install Hyperfine with a simple command:

sudo dnf install hyperfine

On macOS—or another system that supports it—you can use Homebrew:

brew install hyperfine

For other systems, check the project’s detailed installation instructions.

Basic Use of Hyperfine

Hyperfine spawns a subshell to run the commands you pass it. You don’t need to think about this too much, but it does mean you should run Hyperfine as follows:

hyperfine command-in-path

# OR

hyperfine /full/path/to/command

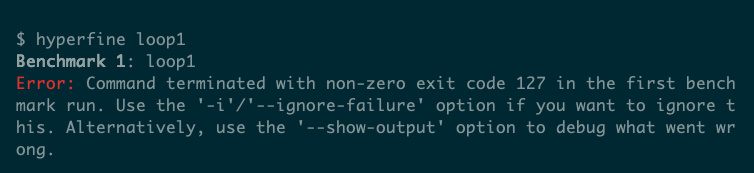

In particular, this means that you can’t just run Hyperfine against a program in your current directory by passing its name as an argument:

hyperfine a.out

If you try this, you’ll get an error like “Command terminated with non-zero exit code 127,” which can be difficult to debug.

Instead, just pass a path to the command, e.g.

hyperfine ./a.out

Hyperfine also treats each argument as a separate command to benchmark, so quote any command that takes arguments of its own:

hyperfine "du -skh ~"

How Benchmarking With Hyperfine Can Help You

Benchmarking can be as accurate as you choose to make it, whether you’re marketing a product, arguing for a code change, or merely interested in how quickly you can run grep across your hard drive.

Checking the Efficiency of Your Code

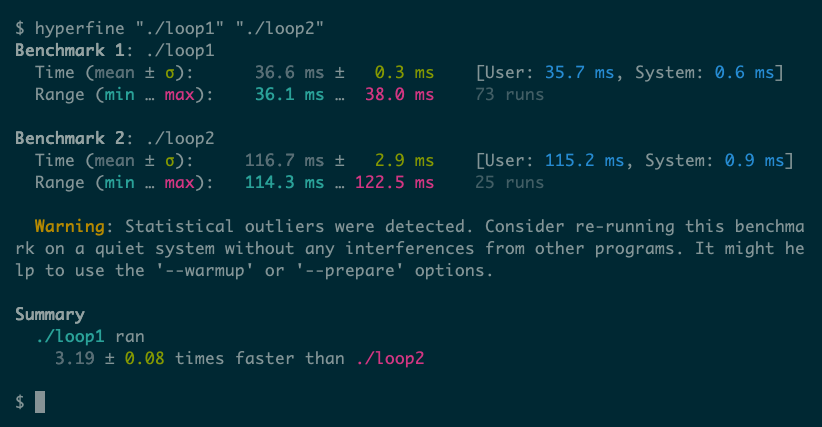

Hyperfine is great at comparing results from two versions of a program under the same conditions. Using it, you can refine your program logic and optimize your code.

Our article on the Linux time command includes two sample C programs that you can use to demonstrate this concept. The first, loop1.c, iterates over a string a fixed number of times (500,000), counting the number of hyphens:

#include <stdio.h>

#include <string.h>

int main() {

char szString[] = "how-to-geek-how-to-geek-how-to-geek-how-to-geek";

int i, j, len = strlen(szString), count = 0;

for (j = 0; j < 500000; j++)

for (i = 0; i < len; i++)

if (szString[i] == '-')

count++;

printf("Counted %d hyphens\n", count);

}

The second, loop2.c, is very similar, but it calls strlen() directly in the loop condition:

#include <stdio.h>

#include <string.h>

int main() {

char szString[] = "how-to-geek-how-to-geek-how-to-geek-how-to-geek";

int i, j, count = 0;

for (j = 0; j < 500000; j++)

for (i = 0; i < strlen(szString); i++)

if (szString[i] == '-')

count++;

printf("Counted %d hyphens\n", count);

}

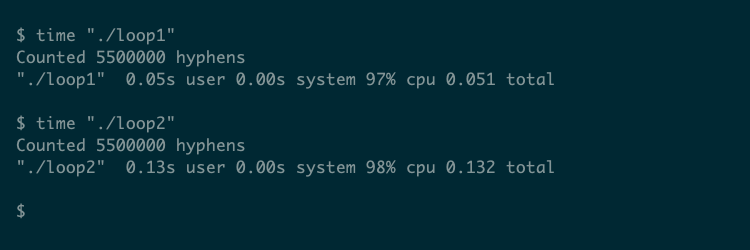

Because the function call is now part of the inner loop's condition, strlen() runs every time that condition is checked (roughly 24 million times in an unoptimized build) instead of just once. The time command can give basic information about how these two versions of the same program compare:

But hyperfine gives more detail, even in the most basic, default mode:

Timing Long-Running Commands

Although Hyperfine is particularly interesting to programmers and system administrators, you can use it in other circumstances. For example, if you ever use a long-running command and background it while you work, you probably don’t know how much time it’s actually taking. Getting into the habit of running it through Hyperfine can help:

For a long-running command, you’ll probably want to limit the number of times Hyperfine runs it. By default, the tool uses its own heuristics to determine a good number of runs for accurate results. You can override this using the --runs option:

hyperfine --runs 1 'du -skh ~'

Dealing With Warnings and Errors

Hyperfine is very keen on reporting warnings, probably because it aims to provide highly accurate statistics. But you may not always care too much about getting a rigorously scientific result; sometimes, just gaining a vague understanding is enough. Hyperfine provides options that cater to such a case.

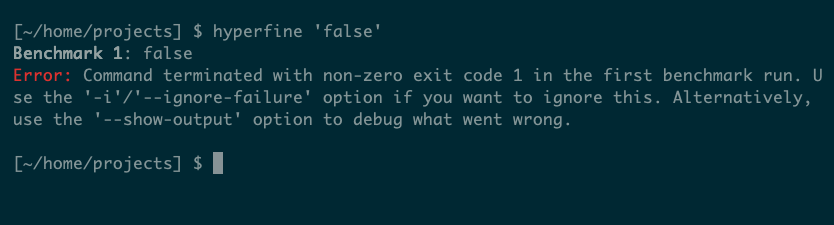

One such case is a command returning a non-zero exit code, which typically indicates that the command has failed. In such cases, Hyperfine will report an error:

As the error message explains, you can ignore such errors using the -i option or try to diagnose them using --show-output. Depending on what you’re doing, the former is probably good enough.

For example, if you run du across your entire disk, it will probably hit a permission error on at least one file. du still runs to completion, but it exits with a non-zero code, which would otherwise make Hyperfine abort the benchmark. Note that running with -i will still produce a warning:

Warning: Ignoring non-zero exit code.

You may also see warnings when the first run is much slower than the rest because the cache is cold, or when other programs skew the timings. The --warmup option will run your command a number of times before it starts benchmarking, which helps to warm the cache and can produce more realistic results.

You can also do the opposite to measure the worst case. The --prepare option lets you run another command before each timed run; it’s up to you to provide a command that will clear any cache that may affect your results.
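If you switch between warm-cache and cold-cache measurements often, a small wrapper can keep the flags straight. The Python sketch below only assembles the argument list. `hyperfine_args` is a hypothetical helper, and the cache-dropping command is a Linux-specific example that needs root, so treat it as an assumption to adapt rather than something to paste blindly.

```python
import shlex
import shutil
import subprocess


def hyperfine_args(command, warmup=0, prepare=None, runs=None):
    """Build an argv list for hyperfine from a few common options."""
    args = ["hyperfine"]
    if warmup:
        # Untimed runs before benchmarking starts, to fill disk and other caches.
        args += ["--warmup", str(warmup)]
    if prepare:
        # Command executed before every timed run, e.g. to clear caches.
        args += ["--prepare", prepare]
    if runs:
        # Fixed number of timed runs instead of hyperfine's own heuristic.
        args += ["--runs", str(runs)]
    args.append(command)
    return args


# Warm cache: three untimed runs first.
warm = hyperfine_args("du -sh .", warmup=3)

# Cold cache (Linux only, needs root): flush and drop the page cache before each run.
cold = hyperfine_args(
    "du -sh .", prepare="sync; echo 3 | sudo tee /proc/sys/vm/drop_caches"
)

if __name__ == "__main__":
    # Print the commands as you would type them in a shell.
    print(shlex.join(warm))
    print(shlex.join(cold))
    # Only run the warm-cache benchmark if hyperfine is actually installed.
    if shutil.which("hyperfine"):
        subprocess.run(warm, check=False)
```

Passing the whole command as one list element mirrors quoting it on the command line, so Hyperfine sees a single command rather than several.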

Working With Hyperfine’s Results

Hyperfine shows timing results in a nice compact, color-highlighted format that’s perfect for a quick check in your terminal. If you want to analyze the results further, though, you’ll want them in a machine-readable format.

The --export-json option lets you specify a file to store JSON-formatted results in (--export-markdown does the same for a Markdown table). They will look something like this:

These results demonstrate that Hyperfine may run your command many more times than you’d expect. In this case, it ran ls over 400 times to build a comprehensive set of results.
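Once the results are in a file, any JSON-aware tool can read them. As a sketch, the Python below turns an export into one summary line per command. It assumes the file has a top-level `results` list whose entries carry `command`, `mean`, and `stddev` (in seconds) plus a `times` list of individual runs; check your own file if a key is missing. The `load_export` and `summarize_export` helpers and the sample numbers are made up for illustration.

```python
import json


def load_export(path):
    """Parse a file written by hyperfine's --export-json option."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def summarize_export(data):
    """Return one summary line per benchmarked command in a parsed export."""
    lines = []
    for result in data["results"]:
        runs = len(result.get("times", []))
        # stddev may be missing or null when there was only a single run.
        stddev = result.get("stddev") or 0.0
        lines.append(
            f"{result['command']}: {result['mean'] * 1000:.2f} ms "
            f"+/- {stddev * 1000:.2f} ms over {runs} runs"
        )
    return lines


if __name__ == "__main__":
    # Made-up sample in the assumed layout, so the script runs without a real export.
    sample = {
        "results": [
            {"command": "ls", "mean": 0.002, "stddev": 0.0005,
             "times": [0.0015, 0.002, 0.0025]}
        ]
    }
    print("\n".join(summarize_export(sample)))
```

With a real export, replace the sample with `load_export("results.json")`, where the file name is whatever you passed to --export-json.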

You may even want to run this kind of benchmarking as a regular test, either against specific software or the current hardware you’re running it on. Setting up a cron job to automate benchmarking will make this much easier.
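For an automated job, it helps to have a script that turns an export into a pass/fail signal. The sketch below compares the mean from a new JSON export against a stored baseline and exits non-zero on a slowdown, which a cron wrapper or CI job can act on. The 10% tolerance, the script and file names, and the `check` helper are all assumptions for illustration, as is the export layout (a top-level `results` list whose first entry has a `mean` in seconds).

```python
import json
import sys


def mean_of_first_result(path):
    """Read a hyperfine JSON export and return the mean time (seconds) of its first result."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)["results"][0]["mean"]


def is_regression(baseline, current, tolerance=0.10):
    """Return True if `current` is slower than `baseline` by more than `tolerance` (a fraction)."""
    return current > baseline * (1 + tolerance)


def check(baseline_path, current_path):
    """Compare two exports; return 1 on a regression and 0 otherwise."""
    baseline = mean_of_first_result(baseline_path)
    current = mean_of_first_result(current_path)
    print(f"baseline {baseline:.4f} s, current {current:.4f} s")
    return 1 if is_regression(baseline, current) else 0


if __name__ == "__main__":
    if len(sys.argv) == 3:
        # Exit status 1 signals a slowdown to the calling job.
        sys.exit(check(sys.argv[1], sys.argv[2]))
    print("usage: check_bench.py BASELINE.json CURRENT.json", file=sys.stderr)
```

A scheduled job would first run Hyperfine with --export-json to produce the current file, then call this script with the baseline and current paths.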